How to make your AI workflows efficient, ethical, and secure

A growing number of UK businesses are adopting artificial intelligence to speed up tasks and improve decision-making. Yet many still lack clear guidance on how to use it safely. A recent survey shows that around 37% of employees use generative AI tools without permission or supervision, creating a real risk of data leaks, compliance breaches, and reputational damage.

These risks come not from the technology itself but from the absence of a clear AI use policy. When AI tools are introduced without structure, even well-intentioned employees can expose sensitive information or rely on unverified outputs. For small and mid-sized teams, this can undermine trust in both their data and their processes.

Below, we share how to make AI workflows work without putting business or customer data at risk.

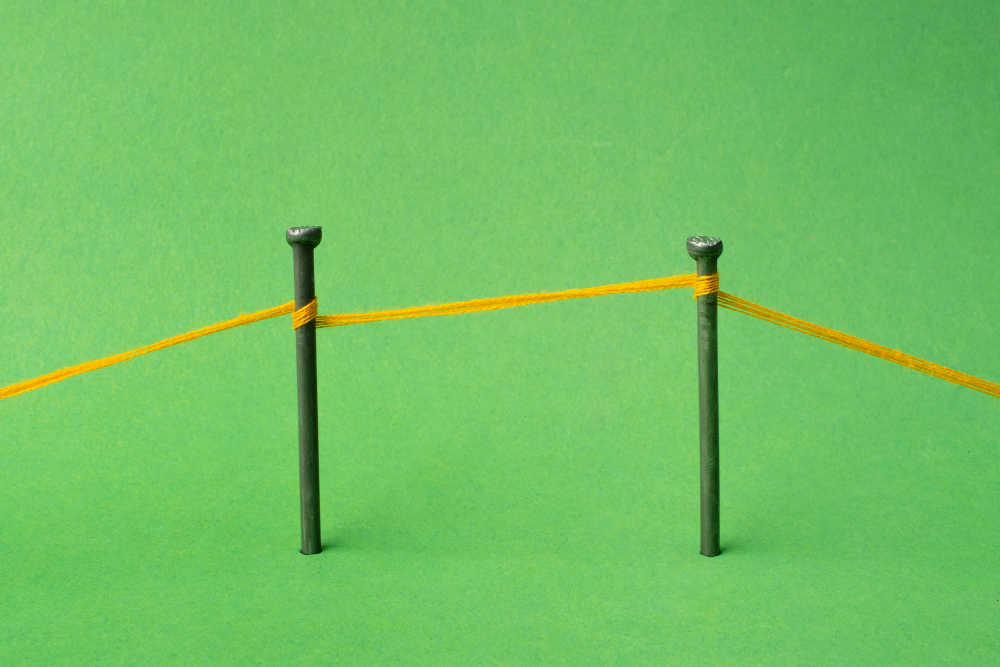

Building clear guardrails with an AI use policy

More than 54% of UK businesses have no formal policy to guide how AI tools are used at work. Without structure, employees are often left to decide what is safe, which can create inconsistency and risk.

A clear AI use policy prevents this by setting shared expectations. It defines what kind of data can be used, which tools are approved, and how results are reviewed before being shared. It also gives your team a common language to talk about AI use responsibly, even when not everyone has a technical background.

Core guardrails for responsible AI use

Three guardrails (data input, tool approval, and oversight) create a simple, repeatable framework that makes AI use safer across your organisation.

Data input

Many ethical problems with AI start at this point. Sensitive data should never be entered into public, general-purpose AI tools. This includes confidential, financial, or personally identifiable information such as client names, strategies, or account details.

When your team needs to process this type of data, it should happen through approved AI platforms that guarantee privacy and do not use your inputs to train their models.

Tool approval

Every AI tool used in your organisation should go through a short review. Your IT or leadership team can check how each platform stores and manages data before it becomes part of your daily workflow. By ensuring every tool is vetted, you protect your business and your employees from accidental exposure.

The simplest principle applies here: if a tool has not been approved, treat all information entered into it as public.
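As a rough illustration of that principle, the check below treats any tool missing from an approved list as cleared for public data only. This is a minimal sketch: the tool names, the sensitivity labels, and the `may_submit` helper are all hypothetical, and a real register would come from your own IT review.

```python
# Hypothetical register of vetted tools and the most sensitive
# data label each one has been approved to handle.
APPROVED_TOOLS = {
    "internal-summariser": "confidential",
    "public-chatbot": "public",
}

# Labels ordered from least to most sensitive (assumed scheme).
SENSITIVITY_ORDER = ["public", "internal", "confidential"]


def may_submit(tool_name: str, data_label: str) -> bool:
    """Return True if data with this label may be entered into the tool.

    Unapproved tools fall back to "public", so anything entered into
    them must already be safe to treat as public.
    """
    allowed = APPROVED_TOOLS.get(tool_name, "public")
    return SENSITIVITY_ORDER.index(data_label) <= SENSITIVITY_ORDER.index(allowed)
```

For example, `may_submit("some-new-tool", "internal")` returns `False` because the tool has not been reviewed, while the same call with the `"public"` label returns `True`.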

Oversight

AI helps generate ideas, drafts, and summaries, but it still requires human judgement. A relevant team member should review every output before it is published, shared, or sent to a client.

This step protects your business from errors caused by bias, missing context, or incorrect assumptions. It also strengthens accountability, ensuring your team remains in control of the results they deliver.

When these three guardrails are part of your process, AI becomes a secure advantage rather than a potential risk. Your team can move faster, make better decisions, and work with confidence knowing their tools and actions meet agreed standards for safety and reliability.

Designing AI workflows around least-privilege access

Many businesses begin using AI informally, often through copy-and-paste routines that feel harmless. An employee might paste client notes into a public AI tool to create a summary or draft an email. Over time, these quick actions can add up, quietly exposing sensitive information outside the company’s control.

Policies create direction, but technical structure puts that direction into practice.

A well-designed workflow ensures that AI tools only access what they need, keeping everything else protected. This approach follows the least-privilege principle, which limits data access to the minimum necessary for each task.

Here are two ways to structure your AI workflows around least-privilege access:

Segment data access

When automating a process such as summarising support tickets, the AI system should only receive the information required for that single function. It does not need access to unrelated data stored elsewhere in your CRM or internal drives.

Segmentation limits exposure and keeps sensitive records separate from everyday operations. It is a simple, technical step that reinforces your responsibility to protect customer data.
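One way to picture segmentation in a ticket-summarising workflow is an explicit list of the fields the AI step is allowed to see. The sketch below assumes each ticket arrives as a dictionary; the field names and the `build_summary_payload` helper are illustrative, not taken from any particular CRM.

```python
# Fields the summarising step is allowed to receive (assumed names).
SUMMARY_FIELDS = ("ticket_id", "subject", "body")


def build_summary_payload(ticket: dict) -> dict:
    """Copy only the allowed fields; everything else stays behind."""
    return {field: ticket[field] for field in SUMMARY_FIELDS if field in ticket}


ticket = {
    "ticket_id": 104,
    "subject": "Late delivery",
    "body": "The parcel arrived three days late.",
    "customer_email": "jane@example.com",  # never leaves the CRM
    "card_last4": "4242",                  # never leaves the CRM
}

payload = build_summary_payload(ticket)
```

Using an allowlist rather than a blocklist means that any field added to the CRM later is excluded by default until someone deliberately approves it.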

Anonymise where possible

Before AI interacts with any dataset, sensitive details such as names, account numbers, or client identifiers can be replaced with neutral labels.

For example, if your team uses AI to summarise customer reviews, anonymised tags like “Customer A” or “Order 104” are often sufficient. The insight remains accurate, while the privacy risk is much lower.

Anonymisation also helps prevent ethical problems with AI, including accidental bias or misuse of information. It shows your team that security and efficiency can coexist within the same process.
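The labelling step can be sketched in a few lines. The example below assumes reviews are dictionaries with a `customer` and a `text` field (both hypothetical names) and uses numbered labels for simplicity. It only replaces names it already knows about, so free text that mentions other people would still need a separate check.

```python
def pseudonymise(reviews: list[dict]) -> list[dict]:
    """Replace each customer name with a stable neutral label."""
    labels: dict[str, str] = {}  # real name -> label, kept internal
    cleaned = []
    for review in reviews:
        name = review["customer"]
        if name not in labels:
            labels[name] = f"Customer {len(labels) + 1}"
        label = labels[name]
        cleaned.append({
            "customer": label,
            # Also swap the name where it appears in the review text.
            "text": review["text"].replace(name, label),
        })
    return cleaned


reviews = [
    {"customer": "Jane Smith", "text": "Jane Smith here, great service."},
    {"customer": "Bob Lee", "text": "Delivery was slow."},
    {"customer": "Jane Smith", "text": "Ordered again, still happy."},
]

safe_reviews = pseudonymise(reviews)
```

Because the same person always maps to the same label, the AI can still spot repeat customers without ever seeing who they are.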

When your AI workflows are designed this way, security becomes a built-in feature. Your team works faster and more confidently, knowing that each process respects privacy, follows clear boundaries, and aligns with your wider AI use policy.

Keeping your AI use policy alive

An AI use policy is not something to set once and leave behind. As tools evolve, your policy and workflows need to evolve with them.

A good review cycle helps you check whether privacy settings remain strong, whether access rules still fit your processes, and whether employees feel supported by the tools they use. These check-ins are what keep improved workflows reliable and safe.

By treating improvement as part of everyday governance, your organisation stays ahead of risks while building confidence in how AI supports your people. Over time, these small reviews strengthen accountability, protect customer data, and show that progress and responsibility can move together.

Turning AI guardrails into lasting confidence

Every new technology brings both potential and pressure. AI has quickly become part of daily work, and many teams now rely on it to move faster. Yet, without structure, that same speed can create uncertainty.

When AI processes are built on a clear AI use policy, secure workflow design, and regular review cycles, your team can innovate responsibly.

You and your team take smarter risks, ideas move faster, and technology becomes a trusted extension of how progress happens. This is the real advantage that appears when AI stops feeling like an experiment and becomes a reliable part of how your business thinks and works.

At Adapt, we help small businesses design AI systems that are safe, transparent, and tailored to how their teams already work. Whether you are formalising your first AI use policy or refining existing systems, our goal is to make AI an advantage you can rely on.